Beyond Collaboration

Reimagining Organizational Structures in the Age of Strong AI

A few days ago, I tried to reason about the organizational structures we can expect if a breakthrough enables Strong AI/AGI. I’d seen attempts by various institutions and thinkers to reason about AI-human collaboration but found them lacking.1 Each of these frameworks failed to account for the increasing general intelligence of AI models.

Upon further inspection, I quickly realized how herculean a task it is to create meaningful and long-lasting frameworks for human-AI collaboration in a world where AGI is between 5 and 20 years away, by the most skeptical estimates of those most qualified to know.2

The necessity of organizational frameworks for working with AI is obvious. We have powerful software that is helping individuals do a lot of knowledge work that would previously have required entire teams.

Many tasks that were considered beyond the reach of deep learning systems just a few years ago have succumbed to the field's rapid progress.

A 5 to 20-year estimate for the inception of AGI means dramatic social transformation.

While I don’t think one can precisely predict when or how scientific breakthroughs occur, I do believe rapid progress in a field indicates potential breakthroughs. As Kurzweil has shown, you can make pretty solid predictions based on observing technological and scientific progress.3

If we are to witness AGI in the next few years, what then should our frameworks for AI-human collaboration look like?

In the rest of this piece, I will use AGI and Strong AI interchangeably.

What is AGI?

Let’s start with some definitions of AGI to ensure we’re all on the same page.

Definitions for AGI come in two flavors:

Systems that are better than humans at any economically relevant task.

A formal definition where the agent is the embodiment of general intelligence — capable of solving all problems in all domain spaces presented to it.

Either of these definitions being met will fundamentally alter the course of human history.4 Everything, from what it means to be human to the lens of scarcity through which humankind has viewed the world, will be seen differently.

How does Strong AI impact naive collaboration frameworks?

These definitions alone (especially the second one) make it obvious why the presence of Strong AI makes naive perspectives on human-AI collaboration unlikely to work.

Given:

Y, the set of all problems in all domain spaces.

X⊆Y, where X represents problems with measurable economic impact.

A(Y) denoting that agent A can solve all problems in Y.

A(X) denoting that agent A can solve all problems in X.
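The step these definitions lead to can be written out explicitly. This is only a sketch, reading A(S) as "agent A solves every problem in the set S", which is how the two statements above are phrased:

```latex
% A(S): agent A solves every problem p in the set S
\[
A(S) \;:\Longleftrightarrow\; \forall p \in S,\ A \text{ solves } p
\]
% Every problem in X is also a problem in Y, so solving all of Y covers all of X
\[
\bigl(X \subseteq Y\bigr) \,\wedge\, A(Y) \;\Longrightarrow\; A(X)
\]
```

The converse does not hold in general: an agent that solves every economically relevant problem need not solve every problem in Y. In that sense the second definition of AGI is the stronger of the two.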

That is to say, an agent satisfying A(Y) also satisfies A(X): we can (and should) expect models embodying general intelligence to solve the economic tasks most dear to us. This is obvious, but people often fail to realize that even today’s models show strong proficiency in a wide range of tasks.

Research shows current state-of-the-art models alone often outperform the collaboration of AI models and humans in operational environments.5 A study of joint doctor-model diagnostic reasoning unexpectedly found this to be the case.6

Human ego, skepticism of model outputs, and “narrowsightedness” often get in the way of choosing great solutions, to a degree that depends on the strength of the model. Collaboration yields gains where models are weaker (and serve as tools) and losses where models are superior in a problem domain.

As models steadily become more generally capable, a “human in the loop” approach holds up for only two main reasons, both of which I will evaluate with decision-making as the objective.

People might argue that human intuition, taste, and creativity are quintessentially human and irreproducible in models, but progress has shown that many characteristics we once believed to be unique to us might be learned behaviors.7

Holding on to the “human in the loop” approach comes down to these two issues.

Accountability

The first reason is Accountability.

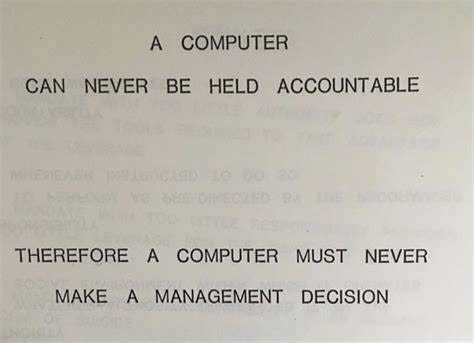

Who do we blame for a decision?

Much ink has been spilled about the image above, but the desire to ensure perfect accountability in the use of Strong AI might come at the cost of less accurate decision-making.

Yes, there are ethical concerns that need to be evaluated before fully deploying Strong AI, or even today’s SOTA AI models, for any form of decision-making. That said, we must also weigh when it would be unethical not to deploy models as primary arbiters.

For example, if we have models capable of better-than-human judgment of health conditions, it might be unethical to give a human the final say on a diagnosis, knowing that the model is far more likely to get the diagnosis right.

Employment

The second reason is our relationship with work.

We’ve organized societies around structures that solve tasks collectively. These organizations have allowed us to tackle tasks far greater in scope than any of us individually and we’ve derived a lot of meaning from this.

A lot of the desire to keep humans in the loop stems from a well-meaning desire to allay fears of mass unemployment. However, mass unemployment is likely inevitable if we see an AGI-enabling breakthrough in the next few years.

A more reasonable focus of study would be the systems needed to ensure a meaningful human transition to a post-AGI world or, better yet, more scenario planning for the periods before and after the invention of AGI.8

This means better economic models of post-scarcity, measures to ensure the well-being of workers impacted by Strong AI, mechanisms to ensure even distribution of the technology, the study of alignment from an Organization Theory/Management Science perspective as well9, and better studies of socio-political games in both information-asymmetric and complete-information environments, with a focus on AI-human interactions.

So what might be better approaches for thinking about human-AI collaboration?

One approach that keeps Strong AI in view would be to abandon human-in-the-loop-oriented frameworks and reorient towards organizations creating personalized benchmarks for their respective use cases, with an emphasis on comparison against current human capability.10

The practice of benchmarking is currently limited to some AI labs and individual AI researchers as a way to evaluate model progress. But I believe this practice should escape containment. Benchmarking should be common practice far more widely. Every organization or person doing some intellectual work should have personal benchmarks for the tasks they feel are relevant to their day-to-day, and should run these benchmarks against state-of-the-art models to measure progress and identify areas for automation. I’d go so far as to say that benchmarks deserve deeper economic study.11

Through this, organizations can meaningfully evaluate which roles are better suited to AI models and can ride the coattails of progress and steady improvements in the general reasoning capabilities of models.
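To make the idea concrete, here is a minimal sketch of what comparing a personal benchmark against a human baseline could look like. It assumes each task already has a human score and a model score on the same 0-to-1 scale. The names `TaskResult`, `compare_to_human`, and `margin`, along with every task and score below, are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskResult:
    """One benchmark task with scores in [0, 1] (hypothetical schema)."""
    task: str
    human_score: float  # current human baseline on this task
    model_score: float  # score of the model under evaluation


def compare_to_human(results, margin=0.05):
    """Label each task by how the model compares with the human baseline.

    `margin` is an assumed tolerance: gaps no larger than it are treated
    as parity rather than a win for either side.
    """
    labels = {}
    for r in results:
        gap = r.model_score - r.human_score
        if gap > margin:
            labels[r.task] = "model ahead"
        elif gap < -margin:
            labels[r.task] = "human ahead"
        else:
            labels[r.task] = "parity"
    return labels


# Made-up scores for illustration only; these are not measurements.
results = [
    TaskResult("invoice triage", human_score=0.90, model_score=0.97),
    TaskResult("contract review", human_score=0.85, model_score=0.70),
    TaskResult("support reply drafting", human_score=0.80, model_score=0.82),
]

report = compare_to_human(results)
for task, label in report.items():
    print(f"{task}: {label}")
```

Re-running the same task list against each new model release would show which tasks move from "human ahead" to "parity" or "model ahead" over time. How the scores themselves are produced (grading rubric, sample size, who sets the human baseline) is left open here and is where most of the real work would be.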

Formulations of organizations as computation (because that is what they fundamentally are) and vice versa also seem to be promising avenues for novel thought.

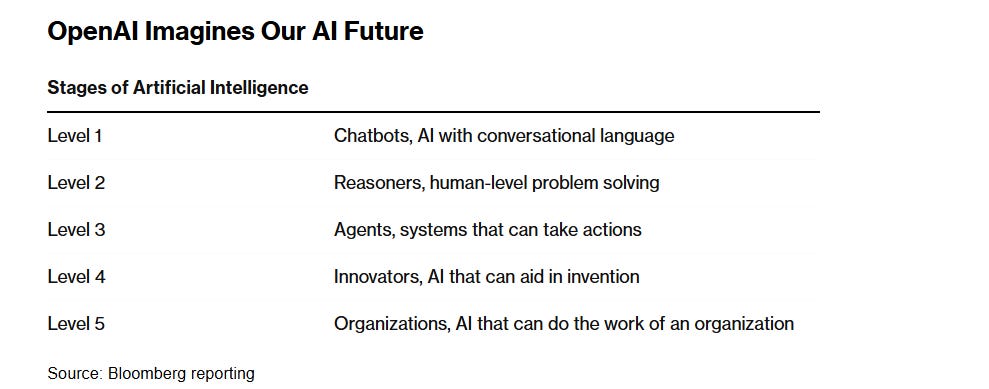

Similar lines of thinking have been proposed by Vern L. Glaser, Jennifer Sloan, and Joel Gehman (2024)12 and by David Strohmaier (2021)13. OpenAI is also rumored to believe in and aim for Strong AI that does the work of entire organizations.

What’s currently lacking in the models of organizations as computation proposed by Glaser et al. and Strohmaier is the dynamism and agency Strong AI systems will possess.

That said, I do not doubt that we will see more rigorous extensions of this thought and, in their conception, the creation of novel mechanisms to make more meaningful use of aligned Strong AI models.

To summarize:

Any framework for AI-human collaboration not accounting for the increasing general intelligence of models is bound to collapse as humans become a bottleneck.

Benchmarking should become the norm in corporations. Efforts should be made to account for where rote tasks could already benefit from automation. Errors will be made, but I argue that a higher degree of accuracy and judgment is far more important than individual accountability/blameworthiness.

Reasoning about AI-human collaboration could benefit from more mathematical and empirical rigor.

1. https://hbr.org/2024/12/the-irreplaceable-value-of-human-decision-making-in-the-age-of-ai

2. A significant number of researchers and leaders of top AI research labs believe AGI is anywhere from 5 to 20 years away. Dario Amodei, the head of Anthropic, elegantly writes out what a manifestation of AGI in the next few years would mean for humanity. Sam Altman has a similar piece. It’s easy to mistake this for mere marketing. But we see researchers like Yann LeCun, who at first glance seem to have taken a more skeptical stance on current progress and approaches, settle on the 5-10/5-20 estimate as well.

3. https://en.wikipedia.org/wiki/Technological_singularity

4. https://en.wikipedia.org/wiki/Artificial_general_intelligence

5. https://www.nature.com/articles/s41562-024-02024-1

6. The authors of this paper unexpectedly found that the LLMs alone outperformed the collaboration of LLMs and physicians in diagnostic reasoning. Funnily enough, they still conclude that we need more human-AI collaboration. Without clarifying what this entails, we are bound to fall short in creating efficient systems that adequately leverage generalized problem-solving agents.

7. https://www.nature.com/articles/s41598-024-76900-1

8. https://www.imf.org/en/Publications/fandd/issues/2023/12/Scenario-Planning-for-an-AGI-future-Anton-korinek

9. https://en.wikipedia.org/wiki/AI_alignment

10. https://hai.stanford.edu/what-makes-good-ai-benchmark

11. https://x.com/emollick/status/1868141914522034196

12. https://onlinelibrary.wiley.com/doi/full/10.1111/joms.13033?msockid=104031f5184a6a471ab92322192d6b99

13. https://www.researchgate.net/publication/349741938_Organisations_as_Computing_Systems